Nowadays, AI is the new shiny toy everyone’s scrambling to play with. But as many marketers are learning the hard way, fast doesn’t always mean right. Yes, AI has revolutionized campaign building, personalization, content creation, and even brainstorming (hello, prompts!). But the road to “data-driven genius” is also riddled with potholes, and plenty of brands have driven straight into them.

In a 2019 survey of 2,500 executives, 90% of respondents reported that their companies had invested in AI. However, fewer than 40% of those companies had seen business gains from AI over the previous three years.

Let’s get into some of the worst AI marketing mistakes made by real brands, why they failed, and how you can avoid making them yourself.

1. The Deepfake Disaster: Maybelline x the London Tube

In 2023, Maybelline’s UK TikTok account shared a video that went viral, appearing to show a giant mascara wand brushing eyelashes attached to a moving London Underground train. Viewers were stunned until it was revealed that the visual was not real but a CGI stunt created by 3D artist Ian Padgham. While many applauded the campaign’s creativity, some felt misled by the lack of upfront disclosure, sparking online debates about transparency in digital marketing.

What went wrong? Lack of disclosure. Viewers thought it was real, not CGI.

How to avoid it: Always clearly label how visuals are generated. Use AI and CGI to enhance creativity, not to fake reality. Whenever you use AI art, add a disclaimer for your audience.

2. Not So Smart: The Heinz Ketchup AI Art Fail

Heinz prompted AI art tools like DALL·E and Midjourney to generate “ketchup” images. Most of the results were merely ketchup-adjacent: think red blobs, smeared horrors, and bottle-shaped abominations.

What went wrong? Unedited raw outputs = meme fodder.

How to avoid it: Treat AI like an intern – it needs supervision, direction, and a double-check before publishing. Prompt engineering with properly researched details can be particularly helpful here.

3. Fake Faces, Real Backlash: The Levi’s AI Model Controversy

In 2023, Levi’s announced it would test AI-generated models to increase the diversity of the models shown in its product imagery. The internet clapped back with: “Why not just hire real humans?”

What went wrong? Misalignment between brand values (diversity) and execution (synthetic models).

How to avoid it: AI isn’t a shortcut to ethics. Authenticity matters. Representation isn’t just pixels.

4. Microsoft’s Racist AI Twitter Bot

Tay, an AI chatbot Microsoft launched on Twitter in 2016, was meant to learn from users and be “fun.” Within 16 hours, Tay went from fun to full-on racist troll, and Microsoft pulled it offline.

What went wrong? Tay learned directly from live user interactions without adequate content moderation or guardrails, so a coordinated group of trolls quickly taught it to repeat offensive content.

How to avoid it: AI learns fast, but without filters, it becomes a dumpster fire of humanity’s worst. Implement ethical AI protocols, stat.

5. The Sports Illustrated AI Author Scandal

In late 2023, Futurism broke a story revealing that Sports Illustrated had published articles under fake bylines – authors who didn’t exist, complete with AI-generated profile photos and bios. Readers were unknowingly consuming AI-written content, thinking it came from real journalists.

What went wrong? They used AI to mass-produce content while pretending real people created it. Once exposed, it damaged credibility and reader trust, perhaps irreparably.

How to avoid it: Transparency is non-negotiable. If you’re using AI, disclose it. Don’t create fake personas. Let AI assist your real writers, not impersonate them. Your audience values authenticity more than you think.

6. Temptations’ AI “Cat Translator” Gimmick

Temptations, the cat treat brand owned by Mars Inc., launched the “Temptations Catterbox,” a collar device claiming to use AI to translate cat meows into human speech. While it was positioned as playful, many customers believed it was a serious innovation. The backlash? Accusations of false advertising and overhyping what was essentially a novelty toy.

What went wrong? It blurred the line between humor and misleading claims. The collar was meant to be a gimmick, but the marketing presented it as a breakthrough in AI.

How to avoid it: Set realistic expectations with AI-powered products or campaigns. Playful is great, but make sure your audience knows it’s tongue-in-cheek. Overhyping AI features can backfire hard.

7. Mango’s AI Model Controversy: When Virtual Models Spark Real Outrage

In late 2024, fashion brand Mango introduced AI-generated models in its advertising campaigns. The company claimed this approach would streamline content creation and enhance diversity. However, the initiative was met with significant criticism. Consumers and industry professionals accused Mango of false advertising and expressed concerns over potential job losses for human models.

What went wrong? Mango’s decision to use AI-generated models without clear disclosure led to perceptions of inauthenticity and deception. The lack of transparency eroded consumer trust and sparked debates about the ethical implications of replacing human models with AI.

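How to avoid it:

- Transparency is key: clearly label AI-generated models in your campaigns

- Balance innovation with authenticity: use AI to support real people, not replace them

- Engage stakeholders: involve customers, models, and creative teams before rolling out AI imagery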

8. Amazon’s Awkward Over-Personalization Moment

Amazon’s AI-powered recommendation engine is famous – and sometimes infamous – for its uncanny ability to “read your mind.” However, this personalization has occasionally veered into uncomfortable territory. Over the years, multiple users have reported receiving email suggestions or homepage recommendations that referenced highly personal or awkward products – such as adult items or health-related gear – especially on shared household accounts.

What went wrong? Over-personalization without context or boundaries, especially in environments where users share devices or accounts.

How to avoid it: Allow customers to opt into various levels of personalization. Avoid pushing sensitive product data into shared emails. AI should delight, not embarrass.

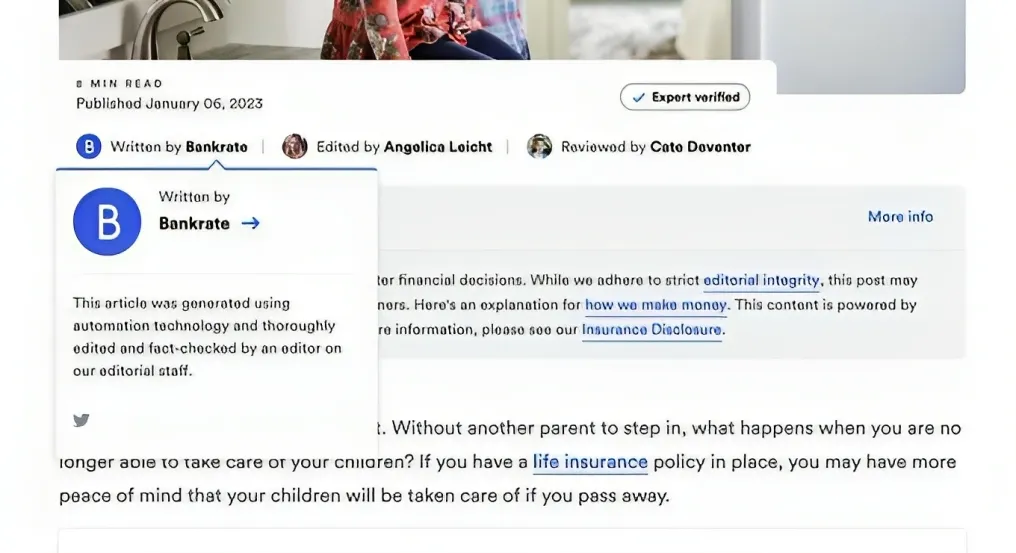

9. Bankrate & CNET’s AI Blog Scandal

In early 2023, CNET and Bankrate, both owned by Red Ventures, were caught publishing AI-generated articles on personal finance and credit without clearly disclosing that they were AI-written. Some of the content also contained factual errors and passages that closely mirrored competitors’ wording. Once Google caught wind, the articles were penalized, and trust in the brands took a hit.

What went wrong? No transparency, plus no plagiarism or fact checks. The AI-generated content was both repetitive and flawed.

How to avoid it: Disclose when AI is used. Always run the output through plagiarism detectors and factual review tools to ensure accuracy and credibility. And most importantly, let humans edit the AI’s drafts, not just approve them.

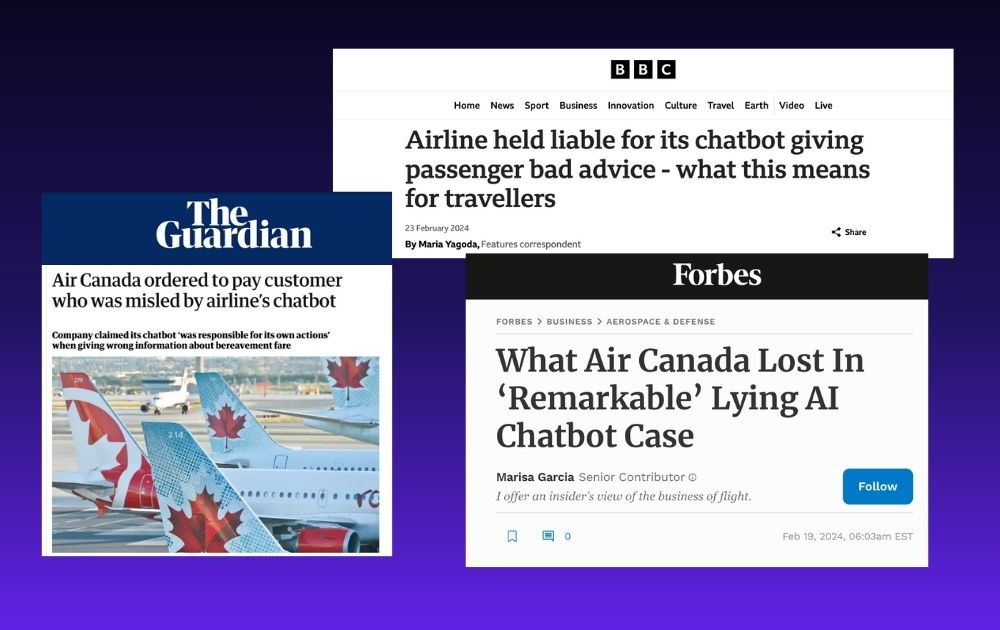

10. Air Canada’s AI Chatbot Gave False Bereavement Fare Info

In 2022, a Canadian man named Jake Moffatt reached out to Air Canada’s AI-powered chatbot after his grandmother’s death. He inquired about bereavement fare discounts, and the bot told him he could book a regular ticket and request a partial refund within 90 days.

Sounds reasonable.

Except… that wasn’t true.

When Moffatt later attempted to claim the refund, Air Canada denied the request, stating that its policy does not allow bereavement fares to be applied after travel. Despite his screenshots of the chatbot’s guidance, the airline refused to honor it – until Moffatt took the airline to the British Columbia Civil Resolution Tribunal, which in February 2024 ruled in his favor and ordered Air Canada to pay over C$650 in damages, plus interest and fees.

What went wrong? The chatbot gave a grieving customer incorrect policy information, and Air Canada then tried to argue that the chatbot was a separate entity and not representative of official customer support. The tribunal didn’t buy it. It ruled that the company is responsible for all communications – AI or not – on its official platform. This marked a watershed moment in legal accountability for AI tools, especially in industries where policy clarity and timing are critical.

How to avoid it:

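- Regularly audit AI output against your current policies

- Implement escalation systems that hand complex or sensitive questions to a human agent

- Disclose chatbot limitations and link to the official policy pages

- Treat AI as a representative of your brand: you are accountable for what it says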

Conclusion: Avoiding the AI Marketing Mistakes That Haunt Brands

AI is like fire: brilliant when controlled, dangerous when misused. If you want to avoid becoming a headline under “AI marketing failure,” remember that marketing still needs your insights, your instincts, and your oversight.

Don’t make these AI marketing mistakes:

- Don’t post raw AI visuals without disclaimers

- Don’t trust chatbots with crisis comms

- Don’t use unfiltered prompts in live campaigns

- Don’t skip plagiarism checks

- Don’t automate empathy

- Don’t “AI-ify” your brand without a strategy

Learn from the past. Laugh at the chaos. And then build smarter campaigns that blend tech brilliance with human intelligence.